EchoView

Video Showcase

Introduction

EchoView is an educational XR experience, designed for people who are deaf or hard of hearing, that visually guides users toward a nearby sound source. The experience also features a minigame that is accessible to everyone: it challenges the user to find the sound source without the aid of visual feedback.

The project focuses on the challenges that people with hearing impairments face in responding to sounds. In everyday life, people are often immersed in environments with many sound events. For instance, a person in a room may hear other people trying to speak to them, objects falling or a door opening. Most of us react to these cues without effort. For people who do not have full hearing, however, noticing and locating them can be very hard.

The proposed solution is valuable because it is a simple tool that can help people overcome this problem. EchoView detects sounds coming from any direction around the user and gives them visual feedback. The minigame is valuable too, as it acts as an educational experience for people who do not have hearing impairments. It requires all players to wear noise-cancelling headphones, and its goal is to have the user guess where a sound is coming from without the aid of EchoView's visual feedback.

Design Process

Initial research

Since the project was developed in a short time frame, the research was limited to a review of existing literature to find out whether a project like this was feasible. In a paper on Head-Mounted Display Visualizations to Support Sound Awareness for the Deaf and Hard of Hearing, the results indicated that XR visualizations help people with hearing impairments by making it easier to identify speakers and sounds, though personal preferences and social settings affect their usefulness. As the paper also states that further work in this field is needed, we were convinced to pursue the project.

Hardware design

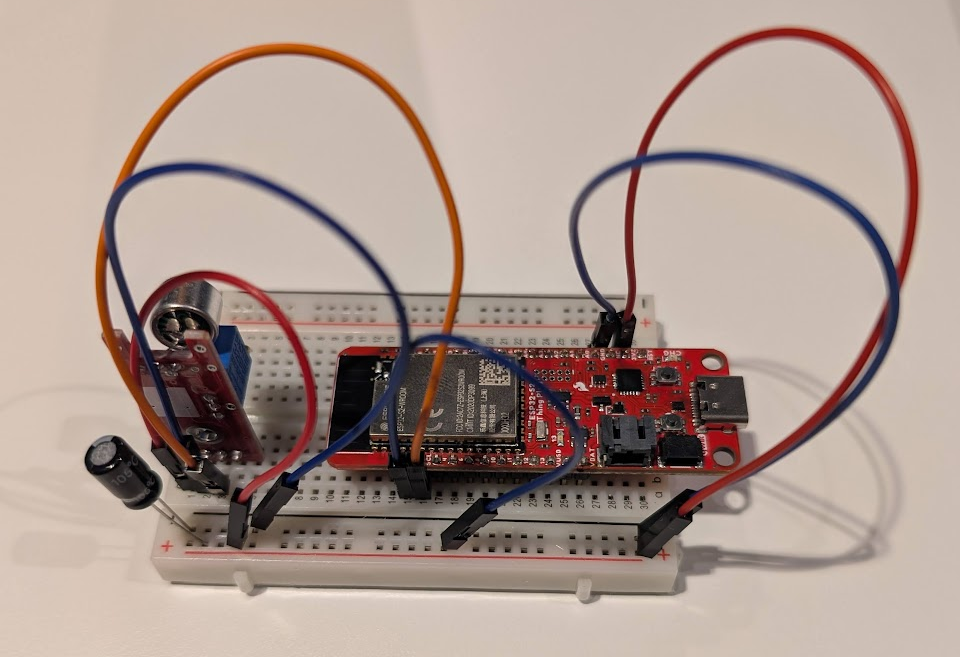

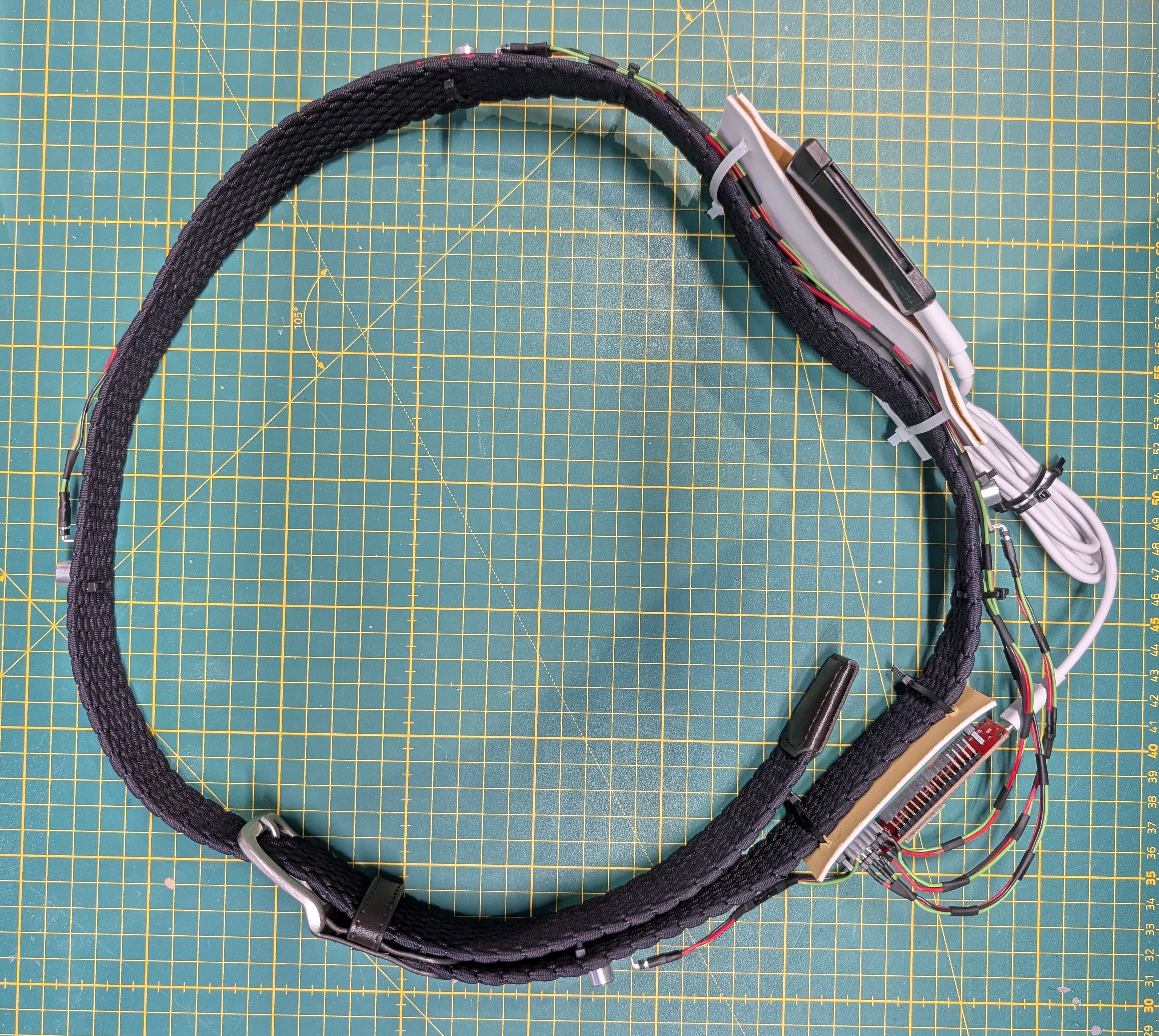

The hardware was designed in two separate parts, which were merged at the end to create the finished product: a wearable prototype, made out of cardboard with the microphone mounted using cable ties, and a breadboard used to prototype the electronics.

As soon as the output of a single microphone could reliably detect audio peaks produced with a knife and a glass, a custom board was made to accommodate all four microphones. The microphones were then mounted on the cardboard belt prototype and peaks were recorded for each microphone; the resulting pattern showed that the approach was feasible. The final prototype was then produced, consisting of a real belt with all the components securely mounted using cable ties.

Unity scene design

The design of the Unity scenes began with initial sketches. Originally, our concept for the Main Scene featured a single visual feedback element at the bottom of the scene. However, we later opted for two separate feedback elements—one on the left and one on the right—for better spatial awareness.

For the Game Scene, our initial plan was to allow the button to be pressed using hand tracking. However, we ultimately chose to use a controller instead, as it also provides haptic feedback.

Intended Users

EchoView mainly targets two groups of people: Deaf, deafened or hard-of-hearing people, for whom the application has the potential to help in tasks where knowing the source of a sound is important; and people without disabilities who wish to develop greater empathy towards those who have hearing disabilities.

We made several design decisions based on the unique needs and challenges that these groups had. These include:

- For Deaf, deafened or hard-of-hearing people, we recognised that the main use of EchoView would be to help them in situations that require them to know quickly whether and where a sound was made. As such, one of our design decisions was to develop the application in XR, using Meta's built-in Passthrough support, instead of in fully virtual reality. We also made the software fully accessible to them: neither the menus nor the game itself ever relies on sound to convey information.

- For people without disabilities, our main design focus was making sure that the experience created a sense of empathy in them towards people who do face a disability. To facilitate that, we developed an additional minigame mode (detailed below) that gives these users a simple task to complete whilst relying only on their hearing, muffled by noise-cancelling headphones, instead of on EchoView's visual feedback.

An additional, minor design consideration was to use careful language, across both the experience and the documentation, when describing people who have some form of hearing impairment. To do so, references such as the Stanford Disability Language Guide were consulted.

System description

- Real-time Audio Visualization: The application provides intuitive visual feedback to represent audio detected by an array of microphones in real time.

- Intuitive Visual Feedback: Two animated visuals appear on the left and right sides of the user's field of view. Their colors and animations change based on the position and distance of the detected sound source, making the feedback immediately understandable.

- Interactive Minigame: Includes a button that users can press using ray interaction with the right controller to guess the real-world position of the detected sound.

- Meta Quest Compatibility: Fully compatible with all Meta Quest headsets starting from the Quest 2.
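This README does not describe how the firmware turns the four microphone signals into a direction. As an illustration only, the sketch below assumes one microphone per side of the belt (front, right, back, left), 12-bit analog samples with a resting level around 2048, and a made-up fixed threshold. It takes the loudest microphone's peak as the direction of the sound. The names MIC_DIRECTIONS, PEAK_THRESHOLD, peak_amplitude and estimate_direction are hypothetical and do not come from the EchoView source.

```python
from typing import Optional, Sequence

# Hypothetical belt layout: one microphone per side, in this order.
MIC_DIRECTIONS = ("front", "right", "back", "left")

# Hypothetical threshold on the deviation from the resting level
# (assumes 12-bit readings in 0..4095, resting around 2048).
PEAK_THRESHOLD = 600


def peak_amplitude(samples: Sequence[int], baseline: int) -> int:
    """Return the largest deviation from the resting (silent) level in one window."""
    return max(abs(s - baseline) for s in samples)


def estimate_direction(windows: Sequence[Sequence[int]], baseline: int = 2048) -> Optional[str]:
    """Return the direction of the loudest microphone, or None if no peak crosses the threshold.

    `windows` holds one window of raw samples per microphone, ordered like MIC_DIRECTIONS.
    """
    if len(windows) != len(MIC_DIRECTIONS):
        raise ValueError("expected one sample window per microphone")
    peaks = [peak_amplitude(w, baseline) for w in windows]
    # Index of the microphone with the strongest peak (ties go to the first one).
    loudest = max(range(len(peaks)), key=lambda i: peaks[i])
    if peaks[loudest] < PEAK_THRESHOLD:
        return None  # nothing loud enough: treat the window as silence
    return MIC_DIRECTIONS[loudest]
```

Picking the loudest channel is the simplest rule that fits a four-microphone belt. Comparing arrival times between microphones could give finer angles, but would require much faster sampling.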

Building & Installation

Building the ESP32 project

Prerequisites

- The Arduino IDE (v2.3.4)

- The ESP32 package (v3.1.1)

- The WebSockets library (v2.6.1)

- The WiFiWebServer library (v1.10.1)

Deploying to an ESP32 Board

- Open the project file EchoView-ESP32.ino;

- Open the header.h file and initialize the variables ssid and password (lines 3 and 4) with your network name and password;

- Compile the project by clicking the Verify button;

- Connect the board to the computer and select the correct port;

- Send the project to the board using the Upload button;

- Open the serial monitor and set the baud rate to 115200 baud;

- Copy the IP address that is printed once the connection is established.

Building the Unity project

Prerequisites

- Unity (v2022.3.56f1) with the Android Build Support module

- The following Meta packages (v72.0.0):

  - Meta XR Core SDK

  - Meta MR Utility Kit

  - Meta XR Haptics SDK

  - Meta XR Interaction SDK

  - Meta XR Interaction SDK Essentials

  - Meta XR Platform SDK

  - Meta XR Simulator

  - Note: installing the Meta XR All-in-One SDK package may cause conflicts with NativeWebSocket.

- The NativeWebSocket package (v1.1.5)

Deploying to a Meta Quest Headset

- Open the project EchoView-Unity;

- Go to File -> Build Settings, select Android and then press the Switch Platform button;

- Inside the Unity editor, double-click on the Scenes folder and open the MainScene;

- Select the WebSocketClientManager object in the scene hierarchy and paste the ESP32 IP address into the appropriate field in the inspector panel;

- Click the Build or the Build and Run button and choose where to save the build files (e.g. create a folder named Build in the project directory), write the build name and press the Save button;

- If you have pressed the Build button, install the APK on your headset (e.g. by running adb install PATH_TO_THE_APK).

Usage

Microphone Belt

Connect the power bank to the ESP32 with a USB-C cable. The ESP32 should then automatically start sending data over the WebSocket connection.

Main Menu

When the application starts, the user is greeted by the main menu, which displays the experience logo and a prompt to wait for the experience to begin. During the demonstration, team members manage the progression of the experience.

The user can advance to the next scene by pressing the trigger on the left controller.

Main Scene

The Main Scene provides the core experience, allowing users to visualize audio detected by the microphones on the belt. The visualization adapts based on two key factors:

- Direction:

  - If the sound originates from the left or right, the corresponding side will illuminate.

  - If the sound is detected in front of or behind the user, both sides will light up.

  - Front and rear sounds are distinguished by different colors.

- Distance:

  - Nearby sounds appear green.

  - Mid-range sounds appear yellow.

  - Distant sounds appear red.

  - If the noise is detected in front of the user, it always appears green.

  - The speed of the animation also changes based on distance.
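The direction and distance rules listed above can be summarised as a small lookup. This is a hypothetical Python rendering, not the Unity code. It assumes the distance has already been classified into near, mid or far bands and keeps the rule that front sounds are always green. It also assumes, though the README does not say so, that closer sounds animate faster, using made-up speed multipliers. Rear sounds here simply follow the distance colors; the real application may use a separate palette to tell front from rear.

```python
from dataclasses import dataclass

# Distance colors as listed above; the speed multipliers are assumptions.
DISTANCE_COLORS = {"near": "green", "mid": "yellow", "far": "red"}
ANIMATION_SPEED = {"near": 2.0, "mid": 1.0, "far": 0.5}  # assumption: closer means faster


@dataclass(frozen=True)
class VisualState:
    left_on: bool
    right_on: bool
    color: str
    speed: float


def visual_state(direction: str, distance: str) -> VisualState:
    """Map a detected sound to the state of the two side indicators."""
    if direction not in ("front", "back", "left", "right"):
        raise ValueError(f"unknown direction: {direction}")
    if distance not in DISTANCE_COLORS:
        raise ValueError(f"unknown distance band: {distance}")
    # Left/right sounds light one side; front/back sounds light both sides.
    left_on = direction in ("left", "front", "back")
    right_on = direction in ("right", "front", "back")
    # Front sounds are always green; everything else follows the distance color.
    color = "green" if direction == "front" else DISTANCE_COLORS[distance]
    return VisualState(left_on, right_on, color, ANIMATION_SPEED[distance])
```

For example, visual_state("left", "far") lights only the left side in red, while visual_state("front", "far") lights both sides in green.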

The user can advance to the next scene by pressing the hand trigger on the left controller.

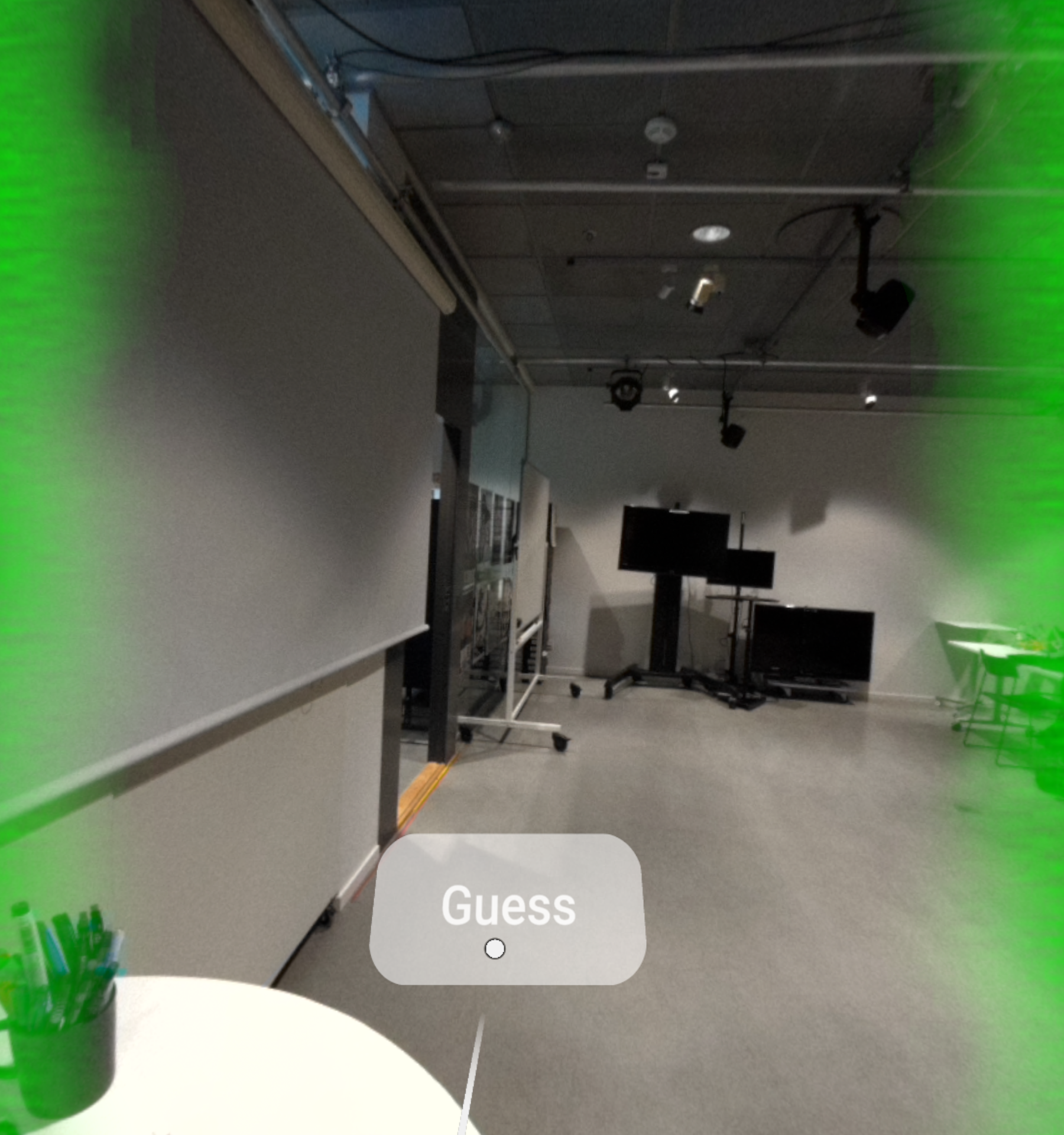

Game Scene

The Game Scene is designed for demo sessions, in which team members generate sounds from different directions and the player must determine where each noise came from. Unlike in the Main Scene, no visual feedback is provided in real time. Instead, the player must listen carefully and make a guess by pressing the Guess button. Once the button is pressed, visual feedback is displayed for a few seconds to indicate whether the guess was correct.
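The README does not specify how a guess is compared with the detected sound. One plausible approach, shown here only as a hypothetical sketch, expresses both the guess and the detected direction as angles measured clockwise from where the user is facing. A guess is accepted if the two angles differ by at most 45 degrees, which splits the circle into one quadrant per belt microphone. The angle mapping, the tolerance and the function names are all assumptions.

```python
# Hypothetical mapping from the belt's four directions to angles in degrees,
# measured clockwise from the direction the user is facing.
DIRECTION_ANGLES = {"front": 0.0, "right": 90.0, "back": 180.0, "left": 270.0}


def angular_difference(a: float, b: float) -> float:
    """Smallest absolute difference between two angles in degrees, in [0, 180]."""
    diff = (a - b) % 360.0
    return min(diff, 360.0 - diff)


def is_guess_correct(guess_angle: float, detected: str, tolerance: float = 45.0) -> bool:
    """Return True if the guessed angle lies within `tolerance` degrees of the detected direction."""
    return angular_difference(guess_angle, DIRECTION_ANGLES[detected]) <= tolerance
```

The modulo step handles wrap-around, so a guess at 350 degrees still counts as "front".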