DIDECT2S

Digital Detectives Comprehensive Tor Toolset (DIDECT2S) - A toolset for categorising, annotating, and saving text excerpts of .onion sites. It includes archiving, data analysis, and visualisation tools to make the data useful.

Part of the PDTOR research project: https://www.nordforsk.org/projects/police-detectives-tor-network-study-tensions-between-privacy-and-crime-fighting

DIDECT2S consists of the following tools: D3-Annotator, D3-Centraliser, D3-Collector, D3-Analyser, and D3-Visualiser. The tools are explained in further detail below.

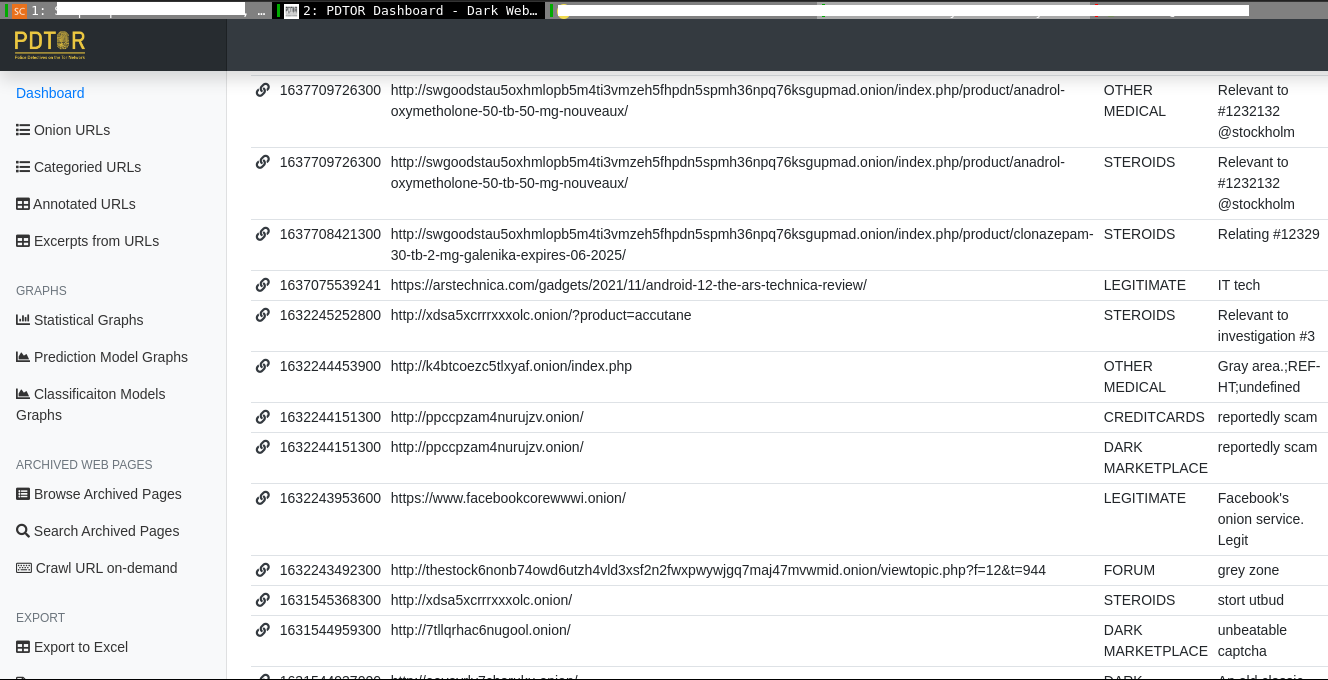

Web Portal (D3-Visualiser)

This is the front-end of the DIDECT2S toolset, where users can view the data in the database, request scraping jobs, download archives of scraped webpages, and more.

Fig. 1 - The DIDECT2S web portal powered by the code from D3-Visualiser.

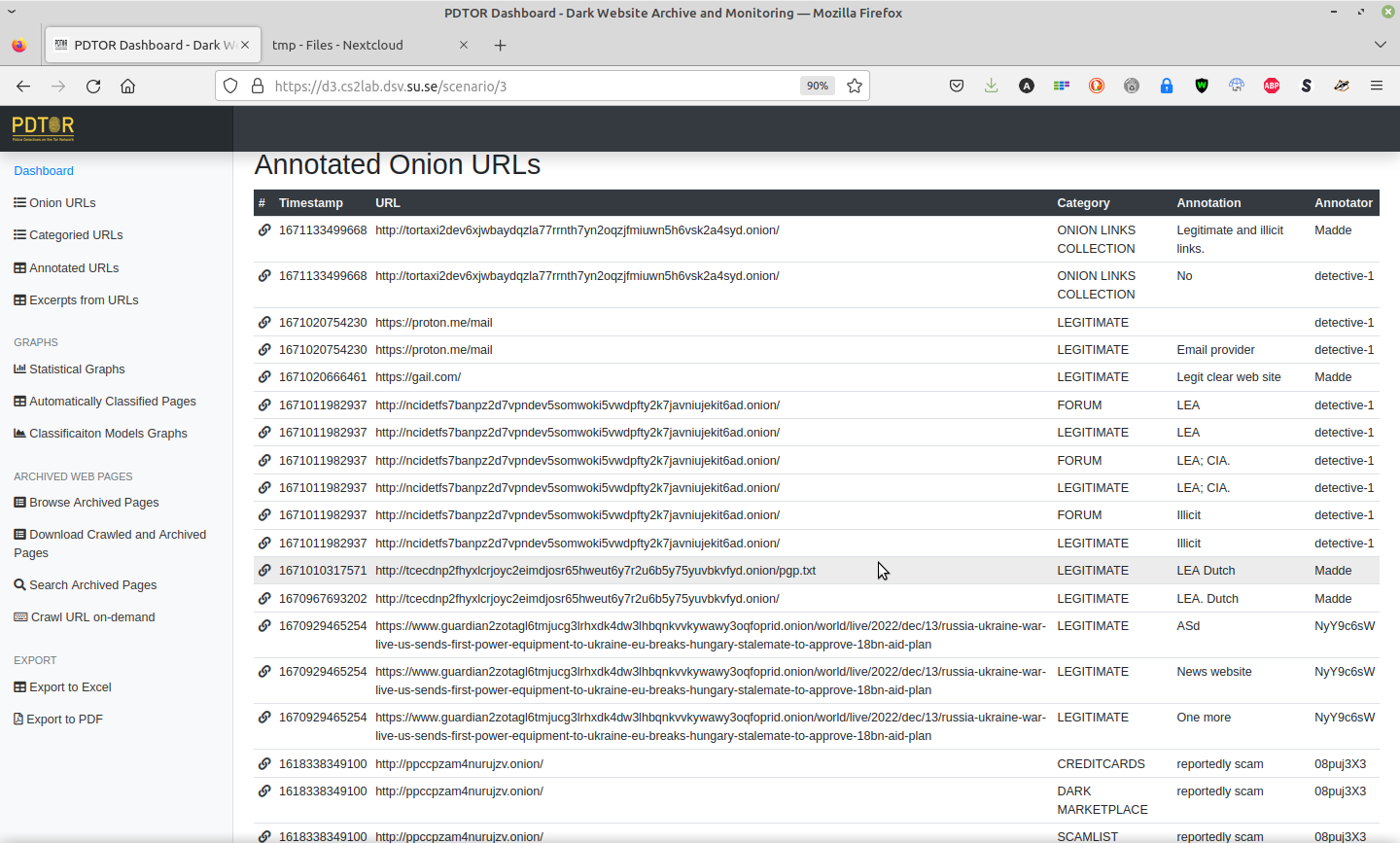

Annotation/Categorisation/Labelling Tool (D3-Annotator)

This is a Firefox add-on that enables users to annotate and categorise (label) web pages. The add-on communicates with a central server that stores all the data in a database. The idea is to let multiple users build a high-quality database of labelled web pages together.
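To make the idea of a shared store of labelled pages more concrete, here is a minimal sketch of how the server side could save annotations. It assumes SQLite and a single `annotations` table; the table layout, column names, and the functions `open_store`, `save_annotation`, and `labels_for` are hypothetical and are not taken from the DIDECT2S code.

```python
import sqlite3

# Hypothetical schema: table and column names are illustrative assumptions,
# not the actual DIDECT2S database layout.
SCHEMA = """
CREATE TABLE IF NOT EXISTS annotations (
    id        INTEGER PRIMARY KEY AUTOINCREMENT,
    url       TEXT NOT NULL,
    annotator TEXT NOT NULL,
    category  TEXT NOT NULL,
    excerpt   TEXT,
    note      TEXT
)
"""


def open_store(path=":memory:"):
    """Open (or create) the annotation store and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def save_annotation(conn, url, annotator, category, excerpt=None, note=None):
    """Insert one annotation and return its row id."""
    cur = conn.execute(
        "INSERT INTO annotations (url, annotator, category, excerpt, note) "
        "VALUES (?, ?, ?, ?, ?)",
        (url, annotator, category, excerpt, note),
    )
    conn.commit()
    return cur.lastrowid


def labels_for(conn, url):
    """Return (annotator, category) pairs for a page, in insertion order."""
    return conn.execute(
        "SELECT annotator, category FROM annotations WHERE url = ? ORDER BY id",
        (url,),
    ).fetchall()
```

Storing one row per annotator and page, rather than one row per page, keeps the individual labels available for later agreement calculations.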

Fig. 2 - The Tor Browser add-on D3-Annotator, which is used to mark text, categorise, and annotate web pages.

Fig. 3 - Once a web page has been annotated or categorised, it is inserted into the database and can be viewed in the web portal.

Web Scraping Tool (D3-Collector)

There are two scrapers available: a simple scraper (Scrapy-based) for fetching pages that do not require authentication, and a more powerful scraper (Selenium-based) for authentication-protected webpages. The Selenium-based scraper takes requests from the web portal (see the animation below) and can be interacted with during runtime.

Crawl on Demand

If a user wishes to crawl a certain URL, the crawl can be requested from the web portal. Some options, such as a delay before the scraping starts, can be set as well. If the user wants to interact with the browser before or while the scraper is running, that can be done by opening the crawler window as demonstrated in the animation below.

Fig. 4 - Crawl on demand is available via a virtual machine accessed through the HTML5 remote desktop client Apache Guacamole.

Download Scraping Archive

Once the crawler has finished the job, the files are compressed into a .tar.gz archive that can be downloaded from the Guacamole file transfer interface by pressing SHIFT+ALT+CTRL (see animation below).

Fig. 5 - After the scraping task is done, a .tar.gz archive of the fetched webpages can be downloaded from the built-in Guacamole file transfer menu.
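The compression step can be illustrated with Python's standard `tarfile` module. This is only a sketch of the general technique; the function names `archive_scrape` and `list_archive` and the directory layout are assumptions and do not describe how D3-Collector actually packages its output.

```python
import tarfile
from pathlib import Path


def archive_scrape(scrape_dir, archive_path):
    """Compress a directory of fetched pages into a gzip-compressed tar archive.

    Both arguments are hypothetical paths chosen by the caller. The archive
    should be written outside scrape_dir so it does not include itself.
    """
    scrape_dir = Path(scrape_dir)
    archive_path = Path(archive_path)
    with tarfile.open(archive_path, "w:gz") as tar:
        # arcname keeps paths inside the archive relative to the job folder.
        tar.add(scrape_dir, arcname=scrape_dir.name)
    return archive_path


def list_archive(archive_path):
    """Return the sorted names of all regular files stored in the archive."""
    with tarfile.open(archive_path, "r:gz") as tar:
        return sorted(m.name for m in tar.getmembers() if m.isfile())
```

Listing the archive after writing it is a cheap way to check that the expected pages were included before the archive is offered for download.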

Download Scraping Archive from Web Portal

As an alternative, the .tar.gz archive can be downloaded from the web portal. At this stage, the textual content has already been inserted into the database.

Fig. 6 - The .tar.gz archives can also be fetched from the web portal.

Analysis Tool (D3-Analyser)

This is a machine learning script (based on scikit-learn) that produces classification models from the data in the database, so that new pages fetched by the scraper can be categorised automatically. The tool also includes an annotator agreement calculation. For now it uses Cohen's kappa, but this should be replaced by Krippendorff's alpha (or similar) in the future.
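For readers unfamiliar with Cohen's kappa, it compares the observed agreement p_o between two annotators with the agreement p_e expected by chance: kappa = (p_o - p_e) / (1 - p_e). The following is a minimal pure-Python sketch of that formula, not the D3-Analyser implementation; the function name `cohens_kappa` and the example labels are illustrative assumptions.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labelled the same pages.

    labels_a[i] and labels_b[i] are the categories the two annotators
    assigned to page i.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of pages")

    n = len(labels_a)
    # Observed agreement: share of pages where both chose the same category.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Chance agreement: for each category, multiply the two annotators'
    # individual label frequencies and sum over all categories.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)

    if expected == 1.0:
        # Both annotators used one identical category for every page;
        # the formula is undefined, so report full agreement.
        return 1.0
    return (observed - expected) / (1 - expected)


# Example with made-up labels for four pages.
annotator_1 = ["drugs", "drugs", "forum", "forum"]
annotator_2 = ["drugs", "forum", "forum", "forum"]
kappa = cohens_kappa(annotator_1, annotator_2)
```

Cohen's kappa only handles two annotators with complete labels, which is one reason a measure such as Krippendorff's alpha is a natural replacement when more annotators are involved.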

Contact

Please get in touch if you have any questions or ideas. The technical setup is a bit complicated, but I would be happy to explain it to you if you are interested.

N.B. The code is not beautiful, and it is not the latest development version.

Licence

Copyright Jesper Bergman (jesperbe@dsv.su.se)

GPL-3 (see LICENCE file)